Have you ever seen a product online or in the real world and wished you could instantly find it, or something similar, without typing a single word? This is the promise of visual search, an AI-driven technology that is changing how we discover products online. It can fundamentally shift information retrieval away from traditional text-based search, letting users bypass the "keyword gap": the common struggle of trying to describe something you can see but can't name. For e-commerce businesses, especially on platforms like Shopify, it represents a significant opportunity to enhance the customer experience, but it also comes with its own set of challenges and costs. Did you know that 36% of consumers have already used visual search, and over half say that visual information is more important than text when shopping online?

What Is Visual Search and How Does It Work in Practice?

Visual search is a technology that allows users to search online using an image as the query instead of text. Powered by artificial intelligence and computer vision, it analyzes an image's features—such as color, shape, and pattern—to find visually similar products, information, or other images from a large database.

Prominent real-world examples like Google Lens and Pinterest Lens demonstrate its effectiveness in identifying objects such as clothing, furniture, or plants directly from a photo. By snapping a picture or uploading an image, users can find items that are otherwise difficult to describe in words. This bridges the gap between a moment of visual inspiration and a final online purchase, making the shopping experience more seamless.

Visual Search vs. Image Search: What's the Difference?

Though often used interchangeably, visual search and image search describe two different actions. The key distinction lies in the type of query used:

- Image Search is the traditional method where you use a text query (keywords) to find images. For example, typing "blue mid-century modern sofa" into a search engine to get pictures of sofas is an image search.

- Visual Search reverses this process. You use an image as the query to find information. For example, taking a photo of a blue sofa you see in a friend's home to find out where to buy it is a visual search.

In short, image search finds pictures using words, while visual search finds information using pictures.

Is Visual Search Right for Every E-commerce Store?

While powerful, visual search is not a universal solution; its effectiveness depends heavily on the industry. Shoppers rely on imagery far more in some categories than in others: 85% of shoppers trust product images over descriptions, especially for categories like apparel or furniture. Visual search provides the most value in categories where aesthetics and visual attributes are the primary purchase drivers, such as:

- Fashion and Apparel: Finding a specific dress pattern or style of shoe.

- Home Decor and Furniture: Identifying a piece of furniture from a photo.

- Beauty and Cosmetics: Matching a specific shade of makeup.

Conversely, its utility is minimal in industries where products are distinguished by technical specifications, model numbers, or textual information. For example, it is largely ineffective for selling electronics, software, books, or dietary supplements, where visual appearance is secondary to function and data.

What Is the Technology Behind Visual Search?

At its core, visual search combines artificial intelligence (AI) with computer vision (CV), the branch of AI that enables machines to interpret and understand images. The process begins when a user provides an image. Computer vision algorithms then perform a critical step known as feature extraction: they identify and isolate key visual characteristics of the image, such as colors, textures, and shapes, and condense them into a compact digital 'fingerprint'. Search algorithms compare this fingerprint against a large, pre-indexed image database to find the items with the most similar features and return the closest matches to the user, typically within seconds.
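To make the "fingerprint and compare" idea concrete, here is a minimal Python sketch. It deliberately uses a classic hand-crafted feature, a coarse color histogram, rather than the deep learning features described in the next section, and it assumes images have already been decoded into lists of (R, G, B) pixel tuples. The function names (`color_fingerprint`, `histogram_intersection`, `search`) and the sample pixels are hypothetical, chosen for illustration.

```python
def color_fingerprint(pixels, bins_per_channel=4):
    """Build a normalized color histogram ("fingerprint") from (R, G, B) pixels.

    Each 0-255 channel value is quantized into `bins_per_channel` coarse bins,
    so the histogram has bins_per_channel ** 3 buckets in total.
    """
    if not pixels:
        raise ValueError("image has no pixels")
    hist = [0.0] * (bins_per_channel ** 3)
    for r, g, b in pixels:
        # Map 0..255 to 0..bins_per_channel-1 for each channel.
        rb, gb, bb = (c * bins_per_channel // 256 for c in (r, g, b))
        hist[(rb * bins_per_channel + gb) * bins_per_channel + bb] += 1
    # Normalize so images of different sizes are comparable.
    return [count / len(pixels) for count in hist]


def histogram_intersection(h1, h2):
    """Similarity in [0, 1]; 1.0 means identical color distributions."""
    return sum(min(a, b) for a, b in zip(h1, h2))


def search(query_pixels, catalog):
    """Rank a pre-indexed catalog {name: fingerprint} by similarity to the query."""
    query = color_fingerprint(query_pixels)
    scored = [(name, histogram_intersection(query, fp)) for name, fp in catalog.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Index the catalog once, ahead of time; only the query is fingerprinted at search time.
catalog = {
    "red-dress": color_fingerprint([(210, 20, 35)] * 100),
    "blue-sofa": color_fingerprint([(30, 60, 200)] * 100),
}
photo = [(200, 30, 40)] * 90 + [(250, 250, 250)] * 10  # mostly red, some white
print(search(photo, catalog))  # [('red-dress', 0.9), ('blue-sofa', 0.0)]
```

Histogram intersection is used here only because it is simple and bounded between 0 and 1. A production system would compare learned embeddings instead, usually through an approximate nearest-neighbor index rather than a linear scan over the whole catalog.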

How AI and Machine Learning Analyze Images

To understand how visual search works, it helps to look at the technologies that enable a machine to interpret images. Artificial intelligence (AI) is the broad foundation: it gives machines the ability to perform tasks that normally require human intelligence. Within AI, computer vision (CV) is the specialized area focused on processing and understanding visual data. The real engine of modern visual search, however, is deep learning, a subset of machine learning (ML) that has dramatically improved image recognition accuracy.

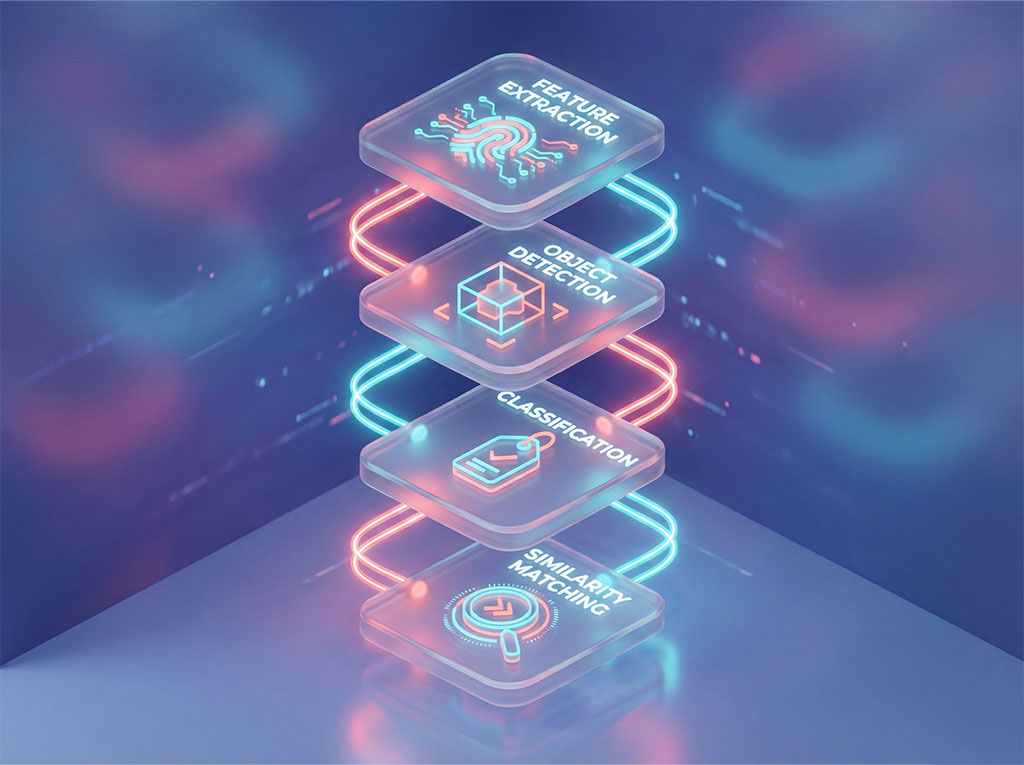

Deep learning models, particularly Convolutional Neural Networks (CNNs), are loosely inspired by the way the brain's visual cortex processes images. They are highly effective, but training and deploying them requires large datasets and significant computational power, which represents a substantial initial investment. The process involves several key tasks:

- Feature Extraction: The process begins by deconstructing the query image into its core visual characteristics. Algorithms identify and isolate key data points like dominant colors, specific shapes, textures, patterns, and even the spatial relationships between different elements. In deep learning systems, the result is typically a compact numerical vector (an embedding) that serves as the image's digital signature.

- Object Detection: Going a step further than simple analysis, object detection algorithms localize and identify specific items within a photograph. This is what allows a user to select a single chair in a fully furnished room and search for that item alone, ignoring the surrounding decor.

- Image Classification: Next, the system categorizes the image or the detected objects. It might classify an image as "apparel," then "footwear," and finally "men's leather boots," adding layers of understanding.

- Similarity Matching: With a detailed digital signature created and classified, the system then executes the search. It compares the query image's features against a massive, pre-indexed image database to find and rank the closest visual matches based on a similarity score.
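The similarity-matching step can be sketched as a nearest-neighbor lookup over precomputed embeddings. This sketch assumes each catalog image has already been turned into a vector by some model (the tiny three-dimensional vectors below are made up for illustration), uses cosine similarity as the score, and optionally restricts candidates to the category produced by the classification step. `top_k_matches` and the index layout are hypothetical.

```python
import heapq
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_matches(query_vec, index, k=3, category=None):
    """Return the k most similar (product_id, score) pairs, best first.

    `index` is a list of (product_id, category, embedding) tuples built ahead
    of time. If `category` is given (e.g. from the classification step), only
    products in that category are scored.
    """
    candidates = (
        (product_id, cosine_similarity(query_vec, vec))
        for product_id, cat, vec in index
        if category is None or cat == category
    )
    return heapq.nlargest(k, candidates, key=lambda item: item[1])


index = [
    ("boot-01", "footwear", [0.9, 0.1, 0.0]),
    ("boot-02", "footwear", [0.7, 0.3, 0.1]),
    ("sofa-01", "furniture", [0.0, 0.2, 0.9]),
]
query = [1.0, 0.2, 0.0]  # embedding of the user's photo (made up)
for product_id, score in top_k_matches(query, index, k=2, category="footwear"):
    print(product_id, round(score, 3))
```

A linear scan like this is fine for a small catalog; at larger scale the same query is usually served by an approximate nearest-neighbor index, such as the vector databases discussed under scalability.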

This entire process is further refined by two supporting technologies. Automated product tagging uses AI to assign relevant metadata and descriptive tags to every image in the database, making the retrieval process faster and more efficient. Furthermore, semantic search capabilities help the system understand the context behind the image, ensuring the results are not just visually similar but also contextually relevant.
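One simple way to approach automated tagging, shown here as an illustrative technique rather than how any particular product does it, is to borrow tags from the most visually similar images that are already tagged (a k-nearest-neighbor vote). The sketch assumes embeddings are available as plain lists of floats; `suggest_tags`, `k`, `min_votes`, and the sample data are hypothetical.

```python
import math
from collections import Counter


def suggest_tags(embedding, tagged_index, k=3, min_votes=2):
    """Suggest tags for a new image from its k nearest already-tagged images.

    `tagged_index` is a list of (embedding, set_of_tags) pairs. A tag is
    suggested if at least `min_votes` of the k nearest neighbors carry it.
    """
    # Euclidean distance: smaller means more visually similar.
    nearest = sorted(tagged_index, key=lambda item: math.dist(embedding, item[0]))[:k]
    votes = Counter(tag for _, tags in nearest for tag in tags)
    return sorted(tag for tag, count in votes.items() if count >= min_votes)


tagged_index = [
    ([0.90, 0.10], {"footwear", "leather", "boots"}),
    ([0.80, 0.20], {"footwear", "boots", "brown"}),
    ([0.85, 0.15], {"footwear", "sneakers"}),
    ([0.10, 0.90], {"furniture", "sofa"}),
]
print(suggest_tags([0.88, 0.12], tagged_index))  # ['boots', 'footwear']
```

Requiring more than one vote filters out tags that only a single neighbor happens to carry, at the cost of missing some genuinely rare attributes.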

Technological and Financial Realities: Beyond the Hype

While the promise of visual search is compelling, a successful implementation requires confronting significant technical and financial challenges that go far beyond a simple software plugin.

- High Infrastructure Costs: Effective visual search is computationally expensive. Extracting features from every product image in your catalog and indexing them for fast retrieval requires immense processing power, often relying on costly GPU servers. For large, dynamic catalogs, these operational costs for maintenance and re-indexing can be substantial.

- The Accuracy Dilemma: Precision (the share of returned results that are relevant) and recall (the share of relevant items that are actually returned) are crucial metrics, but they don't capture the full user experience. When a visual search system fails, it often doesn't return nothing; it returns absurd or completely irrelevant items. This can be far more frustrating for a user than a failed text search and can actively damage their perception of your brand. A system that is 80% accurate can still leave users thoroughly frustrated if the remaining 20% of results are nonsensical.

- Complex Scalability: Keeping a visual search system fast and accurate as the catalog grows is harder than it looks. It requires specialized infrastructure such as vector databases (e.g., Milvus, Pinecone) and a dedicated MLOps (Machine Learning Operations) team to manage, monitor, and retrain the AI models. This is a serious technical commitment, not a set-it-and-forget-it feature.
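The sketch below shows how precision and recall are commonly measured at a cutoff k for a single query, plus a back-of-the-envelope estimate of raw embedding storage, one input to infrastructure cost. The function names, the 512-dimension embedding size, and the catalog size in the example are assumptions for illustration, not figures from any specific system. Note that neither metric says anything about how wrong the irrelevant results are, which is exactly the gap the accuracy dilemma describes.

```python
def precision_recall_at_k(ranked_ids, relevant_ids, k):
    """Precision@k and recall@k for one query.

    precision = relevant results in the top k / k
    recall    = relevant results in the top k / all relevant items
    """
    hits = sum(1 for item in ranked_ids[:k] if item in relevant_ids)
    precision = hits / k
    recall = hits / len(relevant_ids) if relevant_ids else 0.0
    return precision, recall


def raw_embedding_bytes(num_images, dims, bytes_per_value=4):
    """Raw storage for float32 embeddings, excluding index overhead and replicas."""
    return num_images * dims * bytes_per_value


ranked = ["a", "b", "c", "d", "e"]   # what the system returned, best first
relevant = {"a", "c", "x", "y"}      # what a human judged relevant
print(precision_recall_at_k(ranked, relevant, k=5))  # (0.4, 0.5)
print(raw_embedding_bytes(100_000, 512))             # 204800000
```

For example, 100,000 images with 512-dimensional float32 embeddings need 100,000 × 512 × 4 = 204,800,000 bytes, roughly 205 MB, before any index structures, replicas, or re-indexing copies are added.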

How Can a Business Optimize for Visual Search?

While implementing a visual search tool is the first step, you can also optimize your website's images to improve their visibility in external visual search engines like Google Lens. The goal is to provide as much context as possible, so these engines can understand your products. Key strategies include:

- Use High-Quality Images: Provide clear, high-resolution photos from multiple angles on a clean background. The better the image quality, the more accurately AI can identify its features.

- Implement Descriptive Alt Text: Write clear, descriptive alt text for every product image. This not only helps with accessibility but also gives search engines a textual description of the image's content.

- Leverage Structured Data: Use Schema.org markup (specifically Product, ImageObject, and Offer types) to label key product information like name, price, brand, and availability. This gives search engines explicit context about the item in the image.

- Optimize File Names and URLs: Use descriptive keywords in your image file names (e.g., womens-red-floral-dress.jpg instead of IMG_1234.jpg) and ensure they are in a logical URL structure.
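Here is a minimal Python sketch of the last two strategies: generating a descriptive image file name and emitting Schema.org Product markup as JSON-LD. The helper names and the sample product values are hypothetical. The Schema.org types and properties used (`Product`, `Brand`, `ImageObject`, `Offer`, `price`, `priceCurrency`, `availability`) are standard vocabulary, but check each search engine's own structured-data guidelines for which properties it actually requires.

```python
import json
import re
import unicodedata


def image_file_name(description, extension="jpg"):
    """Turn a product description into a descriptive, hyphenated file name."""
    # Drop accents and non-ASCII characters (including curly apostrophes).
    text = unicodedata.normalize("NFKD", description).encode("ascii", "ignore").decode("ascii")
    text = text.lower().replace("'", "")                 # "women's" -> "womens"
    slug = re.sub(r"[^a-z0-9]+", "-", text).strip("-")   # spaces/punctuation -> "-"
    return f"{slug}.{extension}"


def product_json_ld(name, brand, image_url, alt_text, price, currency, in_stock):
    """Build a Schema.org Product snippet as a JSON-LD <script> tag."""
    availability = (
        "https://schema.org/InStock" if in_stock else "https://schema.org/OutOfStock"
    )
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "brand": {"@type": "Brand", "name": brand},
        "image": {"@type": "ImageObject", "contentUrl": image_url, "caption": alt_text},
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": availability,
        },
    }
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"


file_name = image_file_name("Women's Red Floral Dress")
print(file_name)  # womens-red-floral-dress.jpg
print(product_json_ld(
    name="Women's Red Floral Dress",
    brand="ExampleBrand",
    image_url=f"https://example.com/images/{file_name}",
    alt_text="Red floral midi dress with short sleeves, front view",
    price=49.0,
    currency="USD",
    in_stock=True,
))
```

Reusing the alt text as the image caption keeps the textual description consistent across the page, the markup, and the file name.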

How Does Visual Search Enhance User Experience and Personalization?

Visual search fundamentally enhances the user experience by making product discovery more intuitive and seamless. By removing the search friction associated with text-based queries, it provides a direct path from a moment of visual inspiration to a shoppable product. This shift eliminates guesswork, reduces search abandonment, and makes online shopping more accessible and enjoyable by catering to the natural human tendency to think and remember visually. Instead of forcing users to conform to the limitations of a search bar, it adapts to their context.

Using Visual Data for Hyper-Personalization and Targeting

Beyond immediate product discovery, the data generated by visual search is a goldmine for understanding customer preferences on a much deeper level. Every image a user uploads is a rich data point that reveals their specific aesthetic tastes—the colors, patterns, shapes, and styles they are drawn to. This visual data provides far more nuanced insights than traditional keyword searches, enabling businesses to move beyond broad categories and into precise targeting and audience segmentation.

This data directly powers visual recommendation engines: AI-driven systems that suggest products closely matching a user's individual taste, creating hyper-personalized digital experiences.
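A very simple version of a visual recommendation engine can be sketched by averaging the embeddings of a user's uploaded images into a "taste profile" and ranking catalog items by cosine similarity to it. This averaging approach is an illustrative stand-in, not a description of how production recommenders work; the function names and sample vectors are hypothetical.

```python
import math


def normalize(vec):
    """Scale a vector to unit length (leave all-zero vectors unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else list(vec)


def taste_profile(upload_embeddings):
    """Average the user's normalized upload embeddings into one profile vector."""
    if not upload_embeddings:
        raise ValueError("no uploads to learn from")
    unit = [normalize(v) for v in upload_embeddings]
    mean = [sum(column) / len(unit) for column in zip(*unit)]
    return normalize(mean)


def recommend(profile, catalog, exclude=(), k=2):
    """Rank catalog items {product_id: embedding} by cosine similarity to the profile."""
    scored = [
        # Both vectors are unit length, so the dot product is the cosine similarity.
        (product_id, sum(p * x for p, x in zip(profile, normalize(vec))))
        for product_id, vec in catalog.items()
        if product_id not in exclude
    ]
    scored.sort(key=lambda item: item[1], reverse=True)
    return [product_id for product_id, _ in scored[:k]]


uploads = [[1.0, 0.0, 0.2], [0.8, 0.1, 0.0]]  # embeddings of two uploaded photos (made up)
catalog = {
    "floral-dress": [0.9, 0.05, 0.1],
    "denim-jacket": [0.2, 0.9, 0.1],
    "silk-scarf": [0.7, 0.0, 0.3],
}
profile = taste_profile(uploads)
print(recommend(profile, catalog))                            # ['floral-dress', 'silk-scarf']
print(recommend(profile, catalog, exclude={"floral-dress"}))  # ['silk-scarf', 'denim-jacket']
```

The `exclude` parameter lets the store skip items the user has already bought or viewed, so recommendations keep surfacing new products in the same style.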

The Critical Importance of Privacy and Data Ethics

While this user-generated visual data is a "goldmine" for personalization, it also represents a profound responsibility and a significant legal risk. Treating this data carelessly is not an option in the modern regulatory landscape. Before implementing a visual search feature, every business must be able to answer these critical questions:

- Data Storage and Security: Where are user-uploaded images stored, and how are they secured? Are they encrypted both in transit and at rest? Who has access to them?

- User Consent and GDPR: Do you have explicit consent from users to store and analyze their images? A clear privacy policy is non-negotiable, especially under regulations like GDPR, which impose strict rules on processing personal data. An image taken in a user's home can easily contain personal information.

- Data Usage for Training: Are the images users upload being used to train other AI models? If so, is this clearly communicated, and have users consented to it?

- Data Retention Policy: How long are these images stored? Is there a clear policy for their deletion after a certain period?
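Parts of these policies can be enforced in code. The sketch below assumes a hypothetical record type for stored uploads with an upload timestamp and an explicit training-consent flag, and shows two checks: which uploads have outlived the retention window and which may be used for model training. The field names and the 30-day window in the example are assumptions; the actual retention period and consent rules come from your privacy policy and legal review.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class StoredUpload:
    upload_id: str
    uploaded_at: datetime    # timezone-aware UTC timestamp
    training_consent: bool   # did the user explicitly opt in to model training?


def expired_uploads(uploads, retention_days, now=None):
    """IDs of uploads older than the retention window, i.e. due for deletion."""
    if now is None:
        now = datetime.now(timezone.utc)
    cutoff = now - timedelta(days=retention_days)
    return [u.upload_id for u in uploads if u.uploaded_at < cutoff]


def training_eligible(uploads):
    """IDs of uploads the user explicitly consented to use for model training."""
    return [u.upload_id for u in uploads if u.training_consent]


now = datetime(2024, 6, 30, tzinfo=timezone.utc)
uploads = [
    StoredUpload("u1", datetime(2024, 5, 1, tzinfo=timezone.utc), training_consent=False),
    StoredUpload("u2", datetime(2024, 6, 25, tzinfo=timezone.utc), training_consent=True),
]
print(expired_uploads(uploads, retention_days=30, now=now))  # ['u1']
print(training_eligible(uploads))                            # ['u2']
```

Running a check like `expired_uploads` on a schedule, and deleting what it returns, turns a written retention policy into something that is actually enforced.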

Ignoring these questions is not just unethical; it's a legal liability. Building customer trust requires a transparent and secure approach to handling their data from day one.

In conclusion, visual search represents more than just a novel feature; it is a fundamental evolution in how consumers interact with online retail. By leveraging the power of AI and computer vision, it can solve the chronic problem of the 'keyword gap,' transforming a potentially frustrating search process into an intuitive and engaging experience. For Shopify store owners, this technology offers a path to higher engagement and deeper customer insights, but it must be approached with a clear understanding of its costs, limitations, and ethical responsibilities. Implementing a tool like Rapid Search is a starting point, but the true goal is to optimize the entire product discovery journey in a way that is both effective and trustworthy.